This blog is no longer maintained - please visit https://www.qi-fense.com/blog

Applications Are People Too

Application Anthropomorphization

Application Anthropology

Technical Tribal Knowledge

Friday, August 21, 2020

Wednesday, July 10, 2019

Welcome to my INDEX

I realized that I have completely neglected my private blog (this one) in favor of corporate, LinkedIn, and the occasional guest blog – but I still point readers here from time-to-time – so until I resume publishing posts here first, here’s an index into a cross-section of my “other” blog posts…

August 14, 2019 Application Risk Landscape (In)Visibility

July 23, 2019 Has your CISO signed off on your 23 NYCRR 500 development practices?

June 25, 2019 Are Xamarin.Android app users at risk?

October 10, 2018 Rogue Apps: Facilitating Theft from Developers and Consumers

September 12, 2018 Multi-Year Developer Survey Reveals Evolving Practices and Foreshadows Further Change

July 30, 2018 Latest NIST Publications Reinforce the Importance of Application Hardening in Securing Data

February 8, 2018 An app hardening use case: Filling the PCI prescription for preventing privilege escalation in mobile apps

October 19, 2017 Guest blog: (.NET) App Security - What every dev needs to know

September 20, 2017 GDPR, DTSA, ETC: App Dev and the law

September 1, 2017 Be still my beating Heart

August 16, 2017 GDPR liability: software development and the new law

August 13, 2017 App dev & the GDPR: three tenets for effective compliance

June 26, 2017 The Six Degrees of Application Risk

Monday, October 2, 2017

The Six Degrees of Application Risk Originally posted on June 26, 2017

https://www.preemptive.com/blog/article/927-the-six-degrees-of-application-risk/90-dotfuscator

Cyber-attacks, evolving privacy and intellectual property legislation, and ever-increasing regulatory obligations are now simply “the new normal” – and the implications for development organizations are unavoidable; application risk management principles must be incorporated into every phase of the development lifecycle.

Organizations want to work smart – not be naïve – or paranoid. Application risk management is about getting this balance right. How much security is enough? Are you even protecting the right things?

The six degrees of application risk offer a basic framework to engage application stakeholders in a productive dialogue – whether they are risk or security professionals, developers, management, or even end users.

With these concepts, organizations will be in a strong position to take advantage of the following risk management hacks (an unfortunate turn of phrase perhaps) that reduce the cost, effort, complexity, and time required to get your development on the right track.

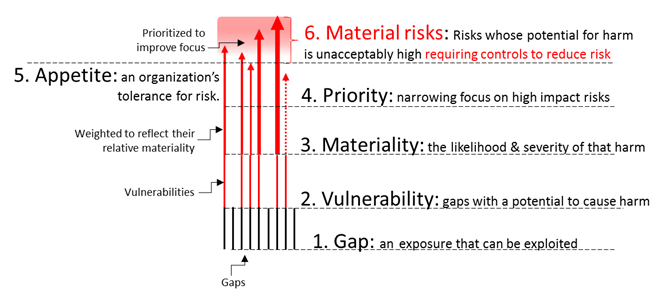

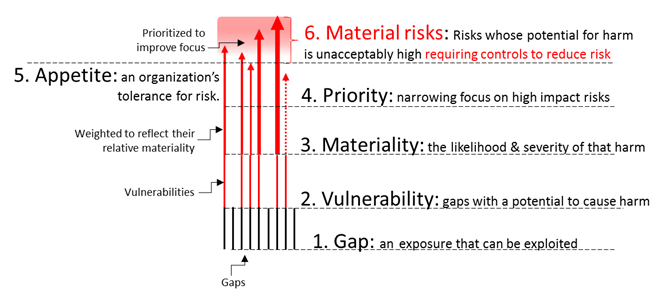

Six Degrees of Application Risk

The following commonly used (and related) terms provide a minimal framework to communicate application risk concepts and priorities.

- Gaps are (mostly) well-understood behaviors and characteristics of an application, its runtime environment, and/or the people that interact with the application. As an example, .NET and Java applications (managed applications) are especially easy to reverse-engineer. This isn’t an oversight or an accident that will be corrected in the “next release.” Managed code, by design, includes significantly more information at runtime than its C++ or other native language counterparts – making it easier to reverse-engineer.

- Vulnerabilities are the subset of Gaps that, if exploited, can result in some sort of damage or harm. If, for example, an application was published as an open source project – one would not expect that reverse engineering an instance of that application would do any harm. After all, as an open source project, the source code would be published alongside the executable. In this case, the Gap (reverse engineering) would NOT qualify as a Vulnerability.

- Materiality is the subjective (but not arbitrary) assessment of how likely a vulnerability is to be exploited combined with the severity of that exploitation. The likelihood of a climate-changing impact of a meteor hitting earth in the next 3 years is significantly lower than the likelihood of an electrical fire in your home. This distinction outweighs the fact that a meteor impact will obviously do far more harm than a single home fire. This is why we, as individuals, invest time and money preventing, detecting, and impeding electrical fires while taking no preemptive steps to mitigate the risks of a meteor collision.

- Priority ranking of vulnerabilities helps to ensure that our limited resources are most effectively allocated. Vulnerabilities are not all created equal and, therefore, do not justify the same degree of risk mitigation investment. Life insurance is important – but medical insurance typically is seen as “more material” justifying greater investments.

- Appetite for risk is another subjective (but not arbitrary) measure. Appetite is synonymous with tolerance. Organizations cannot eliminate risk – but each organization must identify those vulnerabilities whose combined likelihood and impact are simply unacceptable. Some sort of action is required to reduce (not eliminate) those risks to bring them to within tolerable levels. Health insurance does not reduce the likelihood of a health-related incident – it reduces some of the harm that stems from an incident when it occurs. While many individuals have both life and health insurance, there are many who feel that they can tolerate living without life insurance but cannot tolerate losing health insurance.

- Material risks are those vulnerabilities whose risk profile is intolerably high. Material risks are, by definition, any vulnerability that merits some level of investment to bring its likelihood and/or its impact down to within tolerable levels. Ideally, once all risk management controls are in place, there are no “intolerable risks” looming.
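The first bullet's point about managed code can be illustrated by analogy. The sketch below uses Python rather than .NET or Java (an assumption made purely for illustration), and every function and parameter name in it is hypothetical. It shows how much descriptive metadata a runtime keeps by default, and how little meaning survives once identifiers are renamed, which is the most basic form of obfuscation.

```python
import inspect


def compute_license_key(customer_id, secret_salt):
    """Hypothetical function whose names reveal its purpose."""
    checksum = (customer_id * 31 + secret_salt) % 9973
    return checksum


def a(b, c):
    # The same logic after a manual, purely illustrative renaming pass.
    d = (b * 31 + c) % 9973
    return d


def recoverable_names(func):
    """Return the identifiers that can be read back from a live function."""
    params = list(inspect.signature(func).parameters)
    local_names = list(func.__code__.co_varnames)
    return {
        "function": func.__name__,
        "parameters": params,
        "locals": local_names,
    }


if __name__ == "__main__":
    print(recoverable_names(compute_license_key))
    print(recoverable_names(a))
```

Both functions behave identically; only the readable names differ. Renaming does not hide the logic itself, so this is a sketch of the Gap and of one partial control, not a complete protection scheme.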

Applying the Six Degrees of Application Risk

Extending these concepts into the development process, at a high level, translates into the following activities:

- Inventory relevant “gaps” across your development and production environments

- Identify the vulnerabilities within the collection of gaps

- Assess and prioritize according to your organization’s notions of materiality

- Agree on a consistent definition of your organization’s tolerance for these vulnerabilities (appetite)

- Identify the vulnerabilities that present a material risk

- Select and implement controls to mitigate these risks

- Measure, assess, and correct on an ongoing (periodic) basis
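The first five activities above can be sketched as a tiny risk register. This is a minimal illustration, not a prescribed method: the `Gap` class, the multiplicative `materiality` score, the sample entries, and the appetite threshold are all assumptions introduced here, and the likelihood and impact values are subjective estimates that each organization would supply for itself.

```python
from dataclasses import dataclass


@dataclass
class Gap:
    name: str
    exploitable_harm: bool  # True if exploiting the gap could cause damage
    likelihood: float       # subjective estimate, 0.0 to 1.0
    impact: float           # subjective estimate, 0.0 to 1.0

    @property
    def materiality(self) -> float:
        # One simple way to combine likelihood and severity (an assumption).
        return self.likelihood * self.impact


def material_risks(gaps, appetite):
    """Return vulnerabilities whose materiality exceeds the appetite, highest first."""
    # Step 2: vulnerabilities are the gaps that can actually cause harm.
    vulnerabilities = [g for g in gaps if g.exploitable_harm]
    # Step 3: prioritize by materiality.
    ranked = sorted(vulnerabilities, key=lambda g: g.materiality, reverse=True)
    # Steps 4 and 5: compare against the agreed appetite.
    return [g for g in ranked if g.materiality > appetite]


if __name__ == "__main__":
    # Step 1: a hypothetical inventory of gaps.
    inventory = [
        Gap("reverse engineering of an open source build", False, 0.9, 0.0),
        Gap("reverse engineering of a proprietary algorithm", True, 0.6, 0.8),
        Gap("debugger attached in production", True, 0.4, 0.7),
        Gap("rare hardware fault", True, 0.01, 0.9),
    ]
    for risk in material_risks(inventory, appetite=0.2):
        print(f"{risk.name}: {risk.materiality:.2f}")
```

Selecting controls and re-measuring on a periodic basis (the last two activities) would amount to lowering the likelihood or impact estimates and running the same comparison again.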

Simple, right?

Effective Application Risk Management Hacks

Incorporating any new process or technology into a mature development process is, in and of itself, a risky and potentially expensive proposition.

The threat of increasing development complexity or cost, or compromising application quality or user experience is often motivation enough to maintain the status quo.

Avoid unnecessary waste and risk – follow-the-leaders

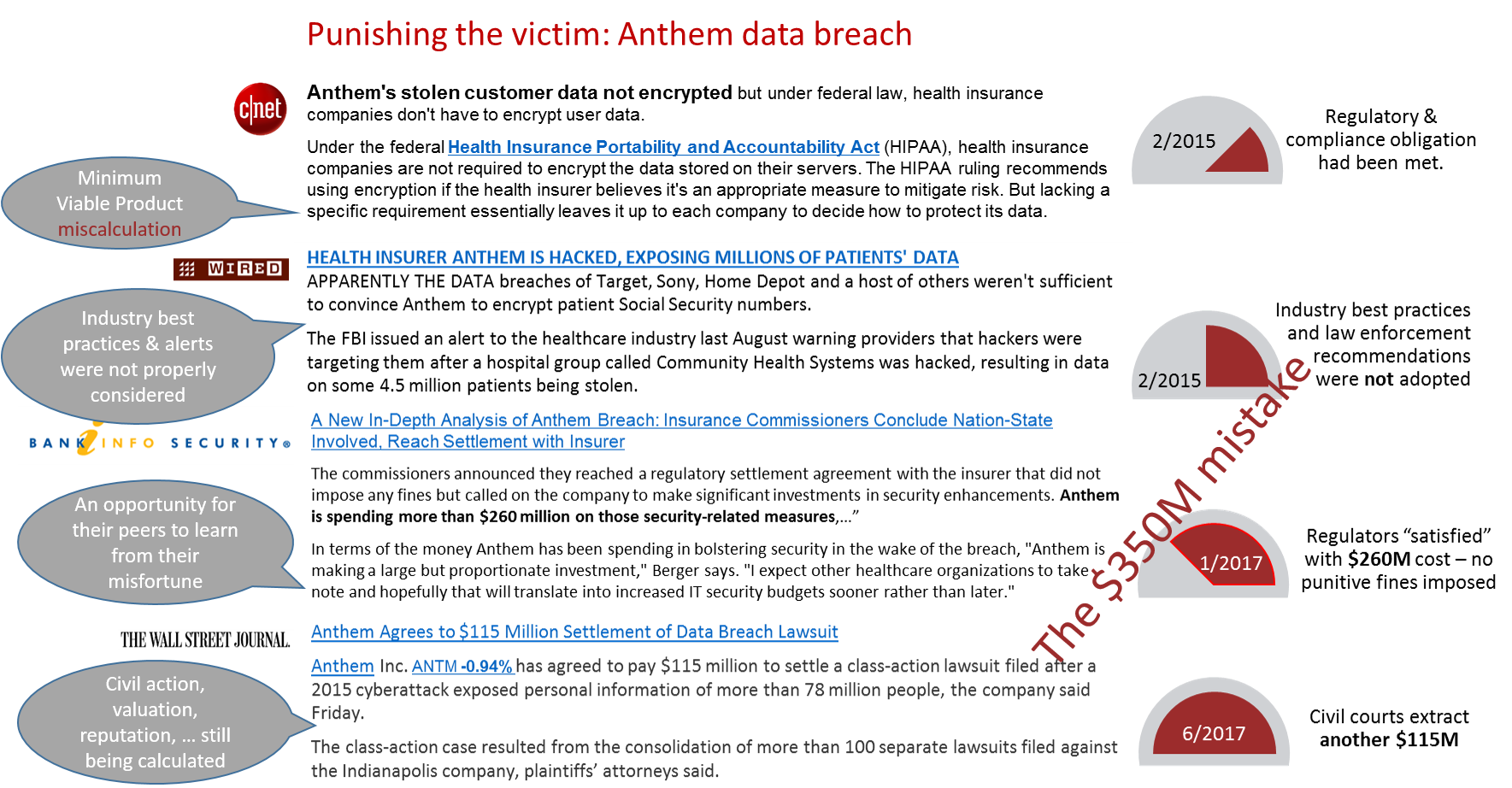

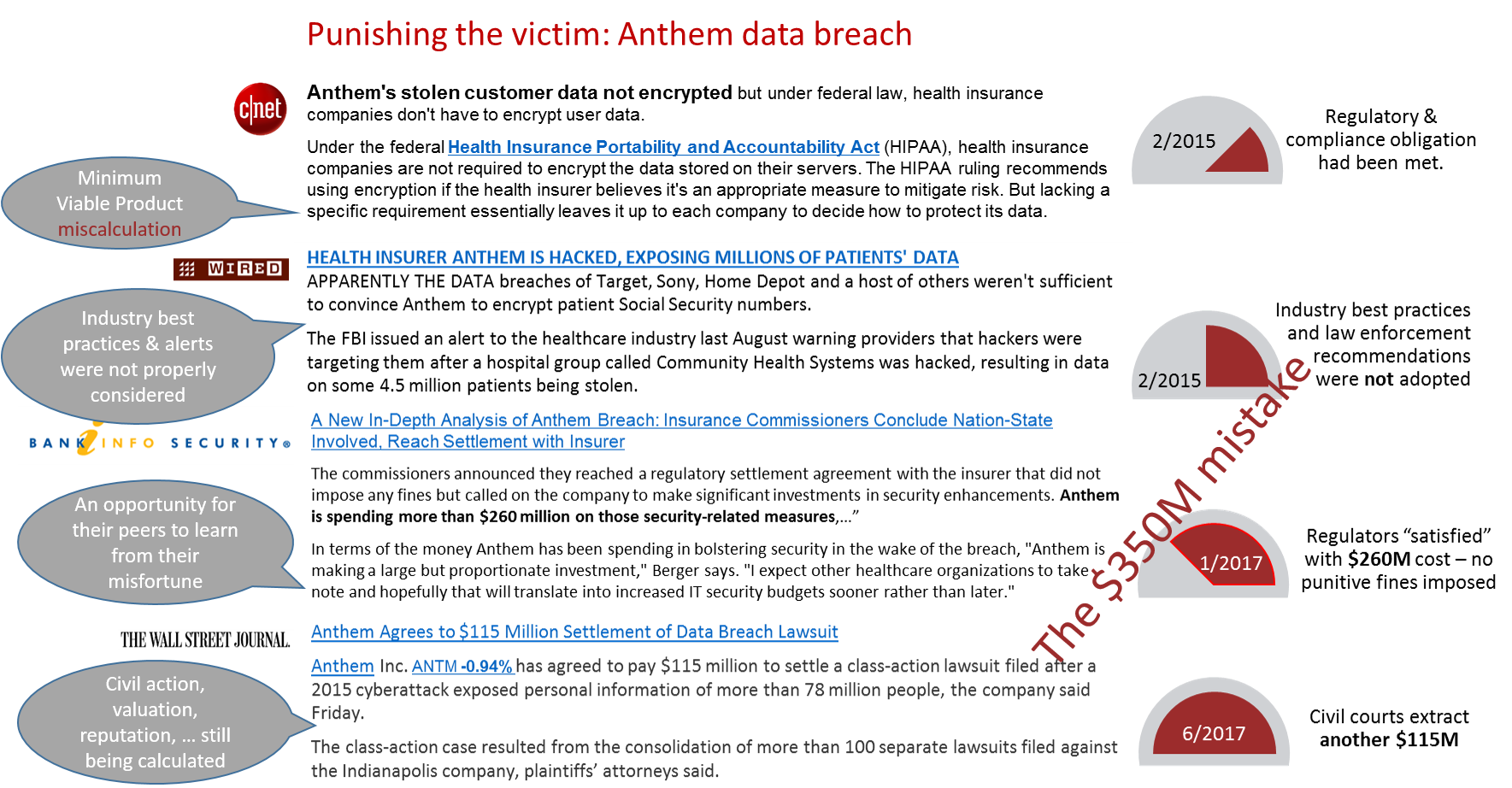

There is an old saying in risk management that “you don’t have to be the fastest running from the bear – you just don’t want to be the slowest.” Hackers mostly attack targets of opportunity and regulators and the courts typically look for “reasonable” and “appropriate” controls. It is often much more efficient to benchmark and adapt the practices of your peers rather than develop your own risk management and security practices from the ground-up. There are many sources from which to choose.

- Benchmark your practices against your organization’s

  - peers (similar organizations)

  - customers (their risks are often, by extension, your risks)

  - suppliers (they are experts in their specialty and/or may pose a risk if they do not live up to your appetite for risk)

- Embrace well-understood and common practices

- Adopt an accepted standard or open risk management framework.

- Monitor regulatory and legislative developments

- Track relevant breaches and exploits and the aftermath

2nd Sneak Peek: 84% of dev teams fail to secure in-app IP from debugger hacks - and that's not the half of it! Originally posted on October 7, 2016

https://www.preemptive.com/blog/article/893-2nd-sneak-peek-84-of-dev-teams-fail-to-secure-in-app-ip-from-debugger-hacks-and-that-s-not-the-half-of-it/90-dotfuscator

In the first "peek" into our soon to be published application risk management survey results, we shared that 58% of the respondents reported making ongoing development investments specifically to manage “application risk.” See Managing Application Vulnerabilities (an early peek into improved controls for your code and data)

Digging into the survey numbers, respondents divided their “application risk” into six subcategories and in the following proportions:

| Risk Subcategories | % of respondents reporting app risk |
|---|---|
| Intellectual property (IP) theft from code analysis (via reverse engineering) | 38% |
| Data loss and (non-application) trade secret theft | 37% |
| IP theft through app abuse (elevated privilege, unauthorized data access, etc.) | 36% |
| Operational disruption (malware, DDoS, etc.) | 32% |
| Regulatory and other compliance violations (privacy, financial, quality, audit, etc.) | 26% |
| Financial theft | 18% |

It’s important to keep in mind that the risks enumerated above are NOT synonymous with technical vulnerabilities; there are multiple paths that a bad actor can take (for example) to “misappropriate” IP and trade secrets – multiple technical vulnerabilities to exploit – and multiple non-technical vulnerabilities too of course (social engineering, armed robbery, etc.).

The table above shows that, while financial theft is surely among the most significant risks most any business faces, only 18% of the development teams in our survey work on applications where attacks against their applications in particular might reasonably lead to financial theft.

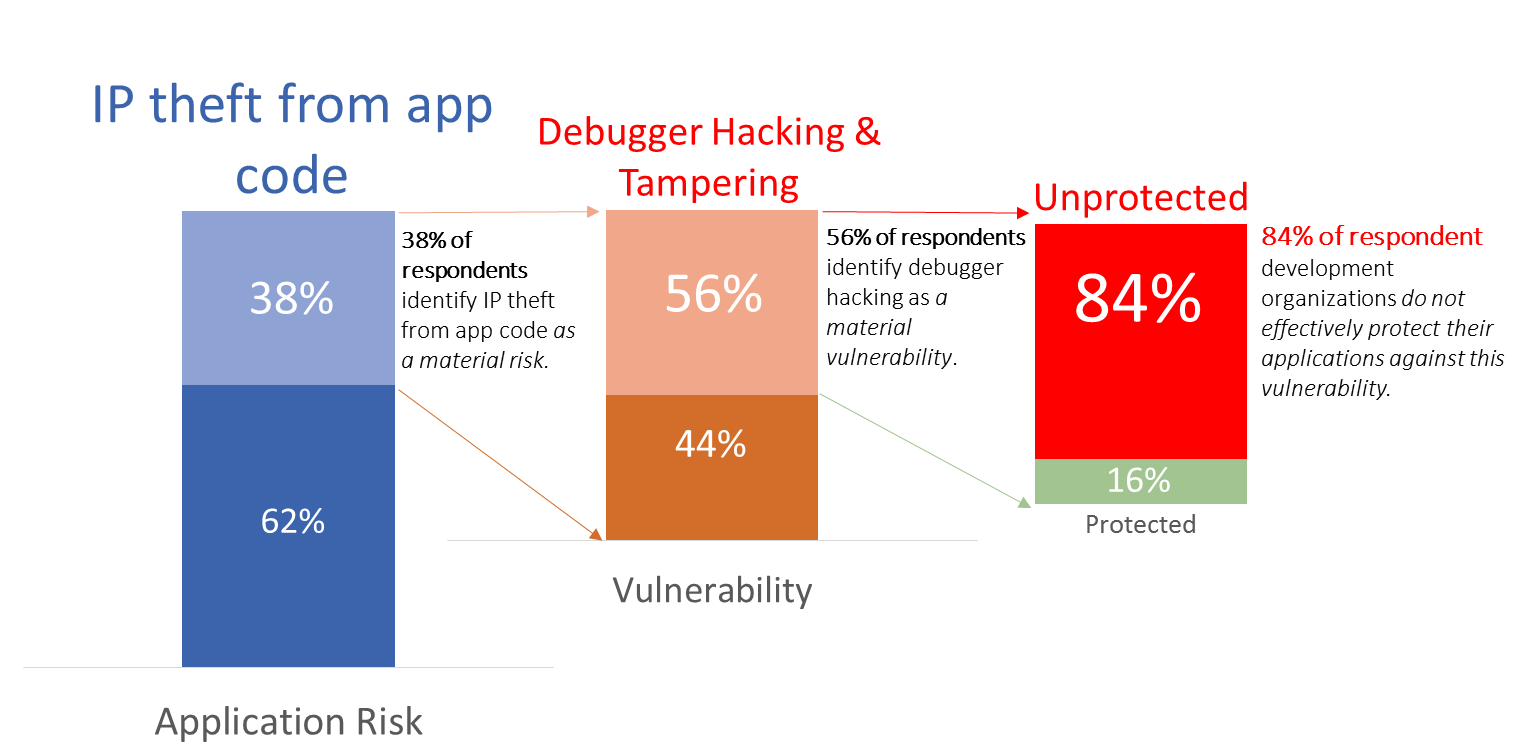

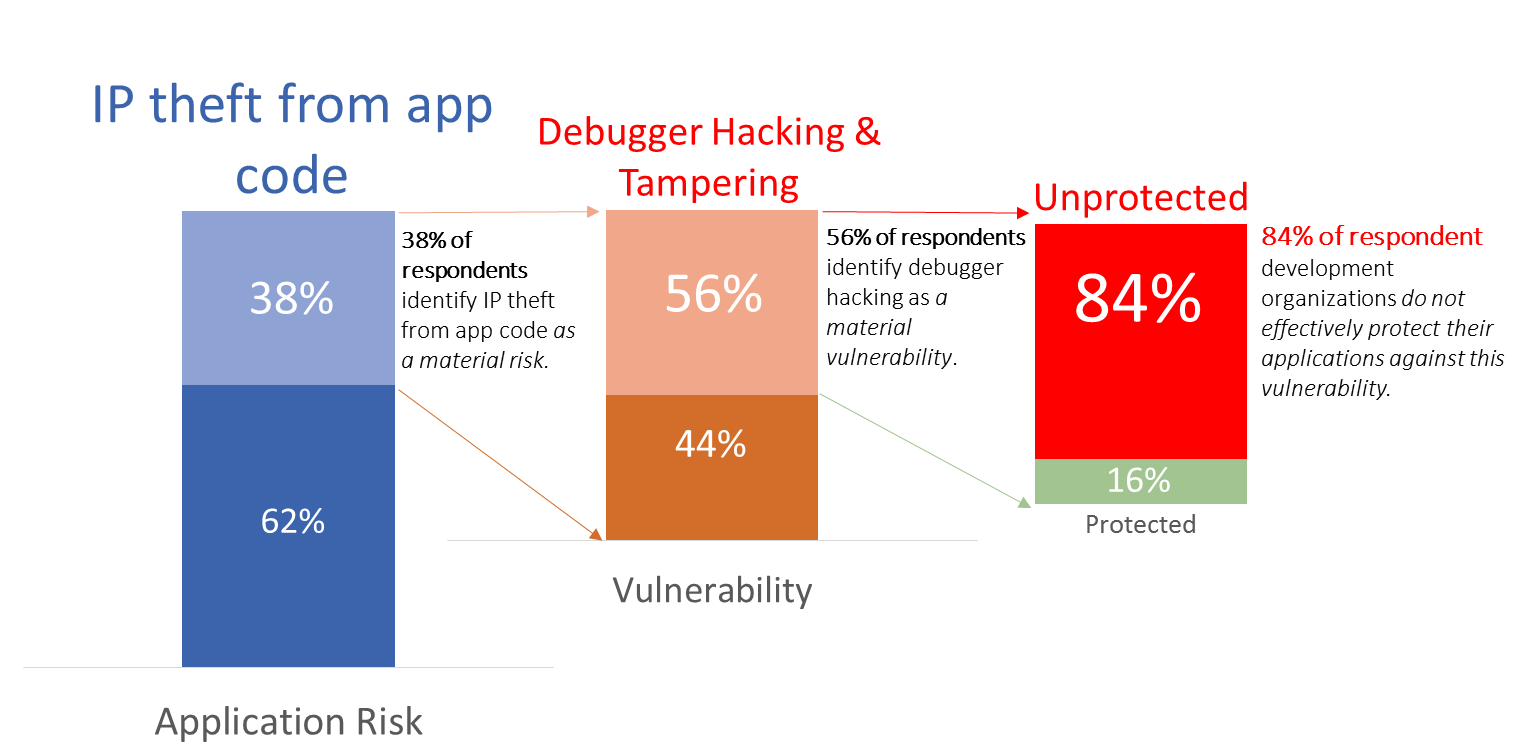

Production debugger use for hacking and tampering left unchecked

The survey showed that, while development teams were invested in mitigating these six application risk categories, a majority of development teams did not have effective controls to prevent one specific technical vulnerability; the unauthorized use of a debugger against applications running in production.

In fact, in every risk category, the majority of development teams:

- Recognized that this kind of debugger attack IS a material threat, AND

- Acknowledged that they DO NOT have adequate controls in place to mitigate this threat.
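To make the idea of a control against production debugger use concrete, here is a minimal sketch in Python (chosen for illustration only; the survey concerned other platforms). It relies on the fact that Python debuggers such as `pdb` install a trace function that `sys.gettrace()` can see. The function names `debugger_attached` and `guard_sensitive_operation` are hypothetical, the check does not detect native debuggers or tools that avoid the trace hook, and a determined attacker can bypass it, so treat it as an illustration of the control category rather than a hardened implementation.

```python
import sys


def debugger_attached(get_trace=sys.gettrace):
    """Return True if a Python-level trace function (as set by debuggers) is active."""
    return get_trace() is not None


def guard_sensitive_operation(operation, get_trace=sys.gettrace):
    """Run `operation` only when no debugger is detected; otherwise refuse."""
    if debugger_attached(get_trace):
        # A fuller control might also log or report the event before refusing.
        raise RuntimeError("debugger detected; refusing to run sensitive operation")
    return operation()


if __name__ == "__main__":
    print(guard_sensitive_operation(lambda: "ran normally"))
```

The `get_trace` parameter exists only so the check can be exercised without attaching a real debugger.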

For illustration, let’s dig deeper into one of the six risk categories to see how this pattern plays out.

Digging deeper: IP theft from code

The chart below shows that 84% of respondents who identified IP theft from their code as a material risk also identified production debugger hacking as a significant and unprotected technical vulnerability.

Risks are like potato chips; you can never have just one

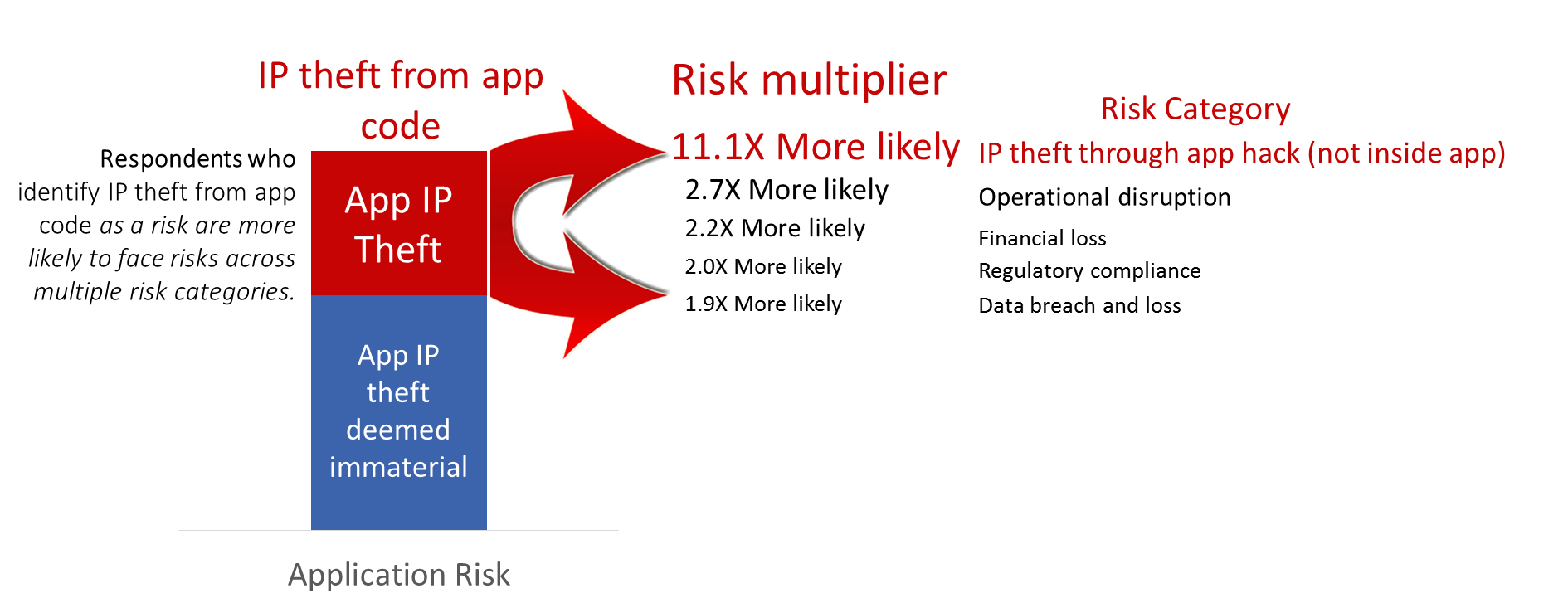

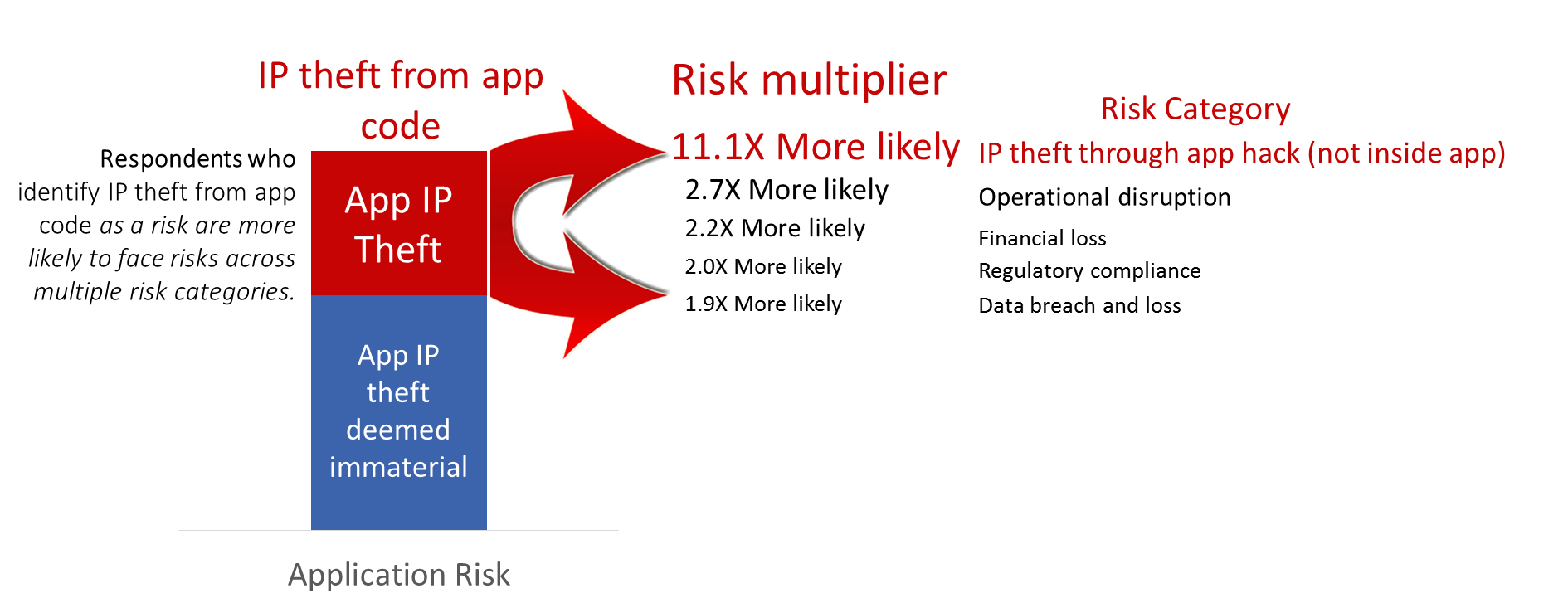

Unmanaged technical vulnerabilities are never a good thing, but this gets exponentially worse if a single vulnerability increases risk across multiple risk categories rather than just one. …and, according to our survey respondents, failing to prevent production debugger hacking most definitely falls squarely into this category.

To further raise the stakes for development teams, our survey clearly showed a strong correlation across risk categories. In other words, once an application has the potential to pose one kind of risk, it is extremely likely that it will pose a risk across multiple categories – thus increasing the potential damage of unchecked technical vulnerabilities like production debugger hacking.

Digging (even) deeper: IP theft from code risk as a leading indicator for additional application risks

According to our respondents, apps that have IP inside code that needs protecting are much more likely to pose additional risks as well.

What's the takeaway from the illustration above?

If you're protecting IP inside your app - you're over 11 times more likely than other development groups to ALSO have IP at risk from app attacks even though that IP lives outside of your app. ...and you're roughly 2X more likely to face risks across the remaining four application risk categories...
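For readers unfamiliar with how a “times more likely” figure is derived, the sketch below shows the arithmetic: it is the ratio of two observed rates. The function name and the counts are made up for illustration and are NOT the survey's data; the post does not publish the underlying numbers.

```python
def relative_likelihood(exposed_with, total_with, exposed_without, total_without):
    """Ratio of two observed rates: rate in the group / rate in everyone else."""
    rate_with = exposed_with / total_with
    rate_without = exposed_without / total_without
    return rate_with / rate_without


if __name__ == "__main__":
    # Made-up counts for illustration only; NOT the survey's data.
    ratio = relative_likelihood(
        exposed_with=30, total_with=40,
        exposed_without=6, total_without=60,
    )
    print(f"{ratio:.1f}x more likely")
```

A ratio like this describes correlation between risk categories in the responses; it does not by itself say that one risk causes the other.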

Also, if you've got unmanaged technical vulnerabilities - to the extent that these vulnerabilities may factor into multiple risk categories, the danger each vulnerability poses is likely to be many times greater than you suspect.

If you’re interested in getting the final numbers (and an even deeper dive into both the risks and controls to effectively mitigate these risks), I expect to be publishing results in the next 1-2 weeks HERE (there's already a link to a related white paper on this page for download too so check that out now if you like).

Trade Secrets and Software: don’t give one up for the other Originally posted on August 5, 2016

https://www.preemptive.com/blog/article/867-trade-secrets-and-software-don-t-give-one-up-for-the-other/90-dotfuscator

The true value of trade secrets – as with any class of intellectual property – is directly proportional to the owner’s ability to enforce their rights through criminal and civil actions.

For the first time, under the recently enacted Defend Trade Secrets Act, a company can pursue claims for trade secret theft in a US federal court and seek remedies such as a seizure order to recover stolen secrets plus get compensated for damages and potentially impose punitive fines as well (making trade secret theft protection on par with other forms of intellectual property infringement i.e., patent, copyright, and trademarks).

However, to take full advantage of these remedies, companies must identify trade secrets in advance and implement reasonable secrecy measures to protect them.

Applying these general rules to application development and operations requires a specialized legal strategy further buttressed by “technical foresight,” e.g. an enhanced DevOps process.

The following videos offer application stakeholders:

- An overview of the legal requirements to successfully protect their rights in a court of law (Winning a Theft of Trade Secrets Action - 5 minutes),

- A dual-pronged methodology that combines legal strategies with technical foresight to meet those requirements (Protecting Trade Secrets through Legal Strategy & Technical Foresight - 9 minutes), and

- Technology to effectively meet these regulatory obligations while also materially improving application and data security (Android Application Risk Management and Protection: Before, During, and After the Hack - 10 minutes).

For a general framework on how to manage application risk and value, see Application Risk Management in a nutshell - 8 minutes.

Defend Trade Secrets Act codifies “open season” on app reverse engineering Originally posted May 13, 2016

https://www.preemptive.com/blog/article/851-obama-signs-trade-secret-legislation-codifying-an-open-season-on-app-reverse-engineering/91-dotfuscator-ce

Code obfuscation and the doctrine of “contributory negligence”

On May 11, 2016, President Obama signed the Defend Trade Secrets Act of 2016.

Enjoying unprecedented bipartisan support (Senate 87-0 and the House 410-2), this bill expands trade secret protection across the US and substantially increases penalties for criminal misconduct – and what could go wrong with that?

After all, according to the Commission on the Theft of American Intellectual Property, the theft of trade secrets costs the economy more than $300 billion a year. …and, thanks in large part to technology, trade secrets have never been easier to move, to copy, and to steal. In fact, in their 5 year strategic plan, the FBI labeled trade secrets as “one of the country’s most vulnerable economic assets” precisely because they are so transportable.

…and nothing in today’s world is more mobile than application software

If you were to assume that this bill has been custom-tailored to protect the trade secrets embedded in application software - you would be in good company

In her most recent blog post praising the Defend Trade Secrets Act, Michelle K. Lee, Under Secretary of Commerce for Intellectual Property and the current USPTO Director writes, "No matter the industry, whether telecommunications or biotechnology, traditional or advanced manufacturing or software, trade secrets are an essential driver of innovation and need to be afforded proper protections.” … “Trade secret owners now also have the same access to federal courts long enjoyed by the holders of other types of IP.”

...but do we really? Do software developers really now "enjoy the same access to federal courts?" Sort of – maybe – OK – maybe not.

I’ll be writing a lot about this topic in the coming weeks and months, but, for now, let’s just drop to the bottom line. Without special care, Application owners have been stripped of every protection granted under the Defend Trade Secrets Act (DTSA).

Let me explain. The DTSA applies exclusively to VALUABLE information that is both SECRET and has been STOLEN (the legal term is “acquired through Improper Means”).

Developer ALERT: The DTSA explicitly EXCLUDES reverse engineering as an improper means. The DTSA states that Improper Means DOES NOT include “reverse engineering, independent derivation, or any other lawful means of acquisition.”

Is this an oversight? Did the legal staff of the Senate Judiciary Committee (who authored this bill) accidentally use this overloaded development term?

The answer is an unequivocal no – the exclusion of reverse engineered software is intentional and by design.

I recently found myself in a briefing on Capitol Hill with senior legal counsel inside the Senate Judiciary Committee (the agenda was encryption that day – not trade secrets) – but I asked this question directly – “Did the committee intentionally include language that would exempt any intellectual property that could be accessed via reverse engineering of applications?” He did not hesitate – in fact, to be honest, he was emphatic. “Yes” he said, “if I can see your IP with a reverse engineering tool – it’s mine.”

OUCH – is this the end of days? Is every algorithm and process embedded in your software officially free for the taking?

Thankfully – no – it’s not nearly that dire.

First – whether or not your IP is covered under this law – obfuscating .NET, Android, Java, or iOS apps makes reverse engineering much harder. Code obfuscation will prevent – or at least reduce the number of times that – your IP is lifted through reverse engineering.

The real question is whether application obfuscation can be used to extend the protections of the DTSA to include application software in a court of law.

“Reasonable Efforts” and “The Doctrine of Contributory Negligence”

How do you ensure employees don’t publicize your textual and image-based trade secrets (and exempt these from protection as well)?

You make sure employees know that they are secret through clear markings, communication, and education – and you secure relevant documents with physical and electronic locks. These are called “affirmative steps” that demonstrate concrete efforts to preserve confidentiality.

Failure to make these kinds of reasonable efforts leads to The Doctrine of Contributory Negligence.

This “doctrine” captures conduct that falls below the standard to which one should conform for one’s own protection. When you fall below this standard, courts will often treat your information as public – and, to the extent you rise above that standard – courts are typically more willing to accept both the secret nature and the value of the IP in question.

Unfortunately, applications are not documents - and so standard “electronic and physical locks” do not apply.

However, code obfuscation does apply here. Obfuscation is a well-understood, widely practiced, and recognized practice to prevent reverse engineering. Code obfuscation does not guarantee absolute secrecy – but it is unquestionably recognized as a “reasonable step” to preserve secrecy – it’s a lock on a front door that sends an unmistakable message to anyone who approaches – if I’m obfuscated – keep out.

Will development organizations who fail to include basic code obfuscation fall prey to the ominous sounding “Doctrine of Contributory Negligence?”

Can application obfuscation send a clear enough message to the courts to bring back trade secret theft protection under the newly minted Defend Trade Secrets Act?

These and other pressing Intellectual Property questions will be answered in upcoming episodes of “As the IP World Turns” (or, more realistically, my next blog post)

In the meantime, don’t forget to take reasonable precautions to protect any potential software trade secrets from reverse engineering.

Reconciling GooglePlay's security recommendations with Xamarin deployment Originally posted February 25, 2016

https://www.preemptive.com/blog/article/837-reconciling-googleplay-s-security-recommendations-with-xamarin-deployment/90-dotfuscator

An app control that both Microsoft and Google can get behind? What about Xamarin?

First - Congratulations Xamarin (and Microsoft) - as someone who has used Xamarin personally and worked with the people professionally, I see this as a win-win-win (for Xamarin, Microsoft, and, last but not least, developers!).

To the topic at hand... One might argue that the phrase "GooglePlay security recommendations" is a contradiction in terms or even oxymoronic - but I take a different view. If (EVEN) Google recommends a security practice to protect your apps - then it must REALLY be a basic requirement - one that should not be ignored.

I'm talking about basic obfuscation to prevent reverse engineering and tampering.

Here's an excerpt from Android's developer documentation

"To ensure the security of your application, particularly for a paid application that uses licensing and/or custom constraints and protections, it's very important to obfuscate your application code." ...and they go on to write "The use of ProGuard or a similar program to obfuscate your code is strongly recommended for all applications that use Google Play Licensing." (I did NOT add the emphasis)

For those unfamiliar with ProGuard - it's a free/open source obfuscator - quite a good one really for the money ;) - but seriously - it's kind of an analog to Dotfuscator Community Edition included with Visual Studio (also for free). The point being that both Google and Microsoft have long recognized that basic controls to prevent reverse engineering need to be ubiquitously available to every developer (no one is suggesting all apps be obfuscated).

...but what about Xamarin apps targeting Android or iOS? ...not so much. ProGuard cannot obfuscate Xamarin apps - nor can any of the other native Java/Android obfuscators (including PreEmptive's own DashO). ...But (good news) Dotfuscator Professional can. ...But (bad news) it's not free. Still, if you're serious about this topic, you'd probably want something other than the "free version" on either platform. Here's a link to a PreEmptive blog post on how to protect your Xamarin apps with Dotfuscator (both iOS and Android): Xamarin Applications and Dotfuscator.

Question: Given the Microsoft Xamarin acquisition, should we (PreEmptive/Microsoft) consider extending Dotfuscator CE (the free one) to provide comparable protection to Android and iOS apps generated by Xamarin as we do for .NET apps today (and since 2003)?

Let me know your thoughts - I really do want to hear from Xamarin developers (and the app owners that employ them :).

GET THIS DEVELOPMENT QUESTION WRONG – AND YOU MAY WELL BE AT RISK. originally posted November 19, 2015

https://www.preemptive.com/blog/article/827-get-this-development-question-wrong-and-you-may-well-be-at-risk/91-dotfuscator-ce

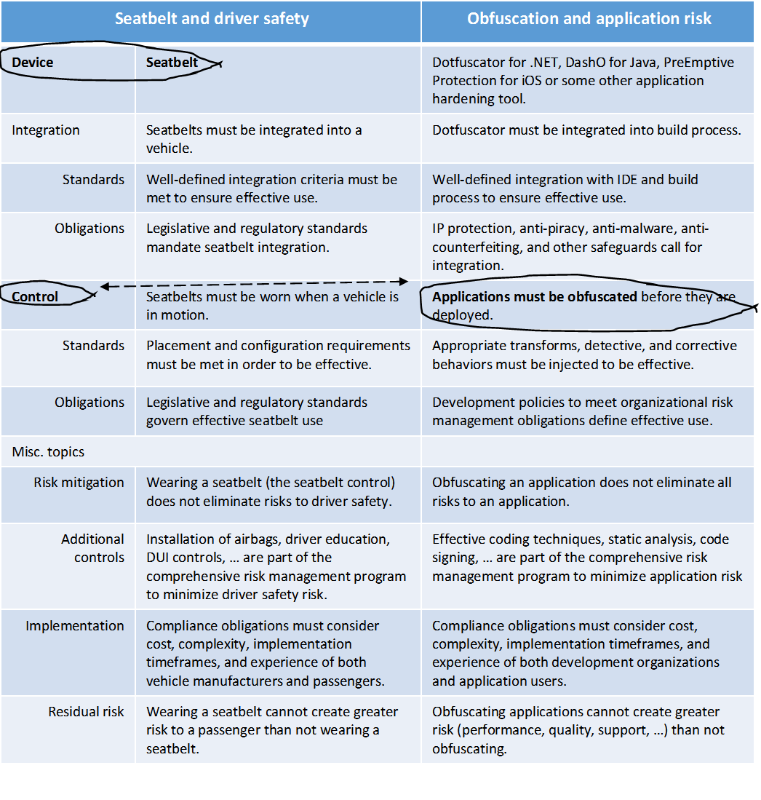

Question: True or False, Seat belts are to Driver Safety as Obfuscation is to Application Risk Management

The correct answer is FALSE!

The equivalence fails because a seat belt is a device and obfuscation is a control. Why might you (or the application stakeholders) be in danger? First, read through the key descriptors of these two controls.

Table 1: contrasting application risk management with driver safety risk management.

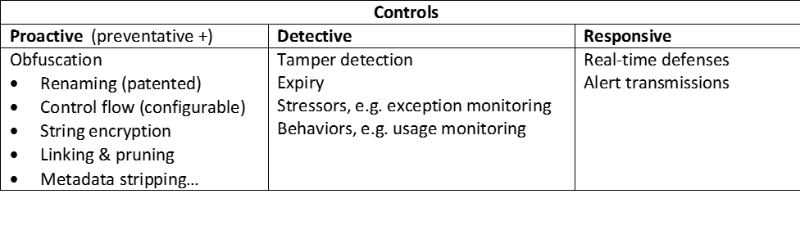

To pursue application development opportunities as aggressively as possible (but not so aggressively as to create unnecessary risk), organizations must also manage application threats and risks through a mix of proactive, detective and responsive controls; controls that are, in an ideal scenario, supported by strong analytics and based on strategic objectives, risk appetite and capacity.

If your organization has not settled on objectives, organizational risk tolerance, and what levels of investment you’re prepared to make to achieve these objectives, you can’t possibly have an effective risk management program.

Effective application risk management;

Consistency and efficiency require sustained investments in the following:

Implement an effective feature set aligned with control categories (proactive, detective, and responsive).

Effective risk management supports all three control “dimensions.”

Table 2: Mapping of application hardening features to three categories of control.

This is not an exhaustive list of techniques and technologies to secure applications; and feature “bake-offs” are always suspect. However, if you don’t assess your risk (which has nothing to do with how easy it is to exploit an application vulnerability), you won’t know if a normal 3 point seat belt is sufficient (for a mainstream car) or if you need a child seat or a 5 point harness required by NASCAR.

Quality

As “the last step” before digital signing and application distribution, quality issues that may arise have the potential to have catastrophic impact on deployment and production application service levels.

Timeliness

Three factors drive release cycles for PreEmptive Solutions application protection and risk management products; the latter two are unique to the larger security and risk management category.

- New product features and accrued bug fixes: this is typically the sole driving force for new software product releases.

- Updates to OS, runtime, and specialized runtime frameworks: delayed support for new formats and semantics would result in delays in developer support for those platforms or will force poor risk management practices on the platforms that most likely need protection most of all.

- Emergence of new threats and malicious patterns and practices: as with anti-virus software, bad actors are constantly searching for ways to circumvent security controls. Without consistent tracking of this activity and timely updates to react to these developments, application security technology can quickly be rendered obsolete.

Low friction

In order to be effective and consistently applied, the configuration and implementation of proactive, detective, and corrective controls cannot require excessive time or expertise. Specific areas where PreEmptive Solutions invests to reduce development and operational friction include:

- Automated detection and protection of common programming frameworks, e.g. WPF, Universal Applications, Spring, etc.

- Custom rule definition language to maximize protection across complex programming patterns at scale.

- Specialized utilities to simplify debugging of hardened applications.

- Automated deployment: support for build farms, dynamically constructed virtual machines, command line integration, MSBuild, Ant, etc. come standard with PreEmptive Solutions’ professional SKUs.

- Cross-assembly hardening to extend protection strategies across distributed components and for components built in different locations and at different times.

- Support for patch and incremental hardening to minimize and simplify updates to hardened application components.

Responsive support

Should critical issues arise, live support can prove to be the difference between applications shipping on time or suffering last-minute and unplanned delays.

Vendor viability

Applications can live in production for years – and with extended application lifecycles comes the requirement to secure these applications across evolving threat patterns, runtime environments, and compliance obligations.

Friday, October 9, 2015

EU's highest court throws out privacy framework for US companies: small businesses suffer

Three ways that small tech businesses are just like every other small business – except when we’re not

Here’s the issue; small tech companies have all of the awesome characteristics of small businesses in the broadest sense (they’re job creators, innovators, revenue makers…) but they often find themselves having to navigate complex regulatory and compliance issues that have historically been reserved for (large) multi-national corporations – all while building their businesses on technology that’s evolving way faster than the regulations that govern them. (As a footnote here, let me throw in a commercial plug for ACT – a trade association focused on exactly these issues).

Tuesday's nullification of the Safe Harbor framework (a system that streamlined the transfer of EU user data to US businesses) in what everyone pretty much agrees was a consequence of the NSA spying scandals is a perfect example. In this case, we see how small tech businesses can get caught in the middle of a p^%*ing match between the EU and the US federal govt. …and I don’t care what side of the aisle you’re on – everyone loves small business growth and innovation right? Here’s a great bipartisan issue that our lawmakers should be able to address – don’t you think?

Three ways that small tech businesses are just like every other small business – except when we’re not

One: Like every small business, we can’t afford to have a permanent team of lawyers on our payroll …but small tech businesses can go international overnight - having to navigate across international jurisdictions.

The Safe Harbor system eliminated a raft of complexity and potentially 1000’s of hours of legal work required to manage EU user data – making it feasible for small tech businesses to do business inside the EU.

Small businesses simply cannot be expected to navigate a maze of international privacy obligations – each with their own rules – and penalties. Without the Safe Harbor system (or something to replace it), previously open markets will soon be out of reach.

Two: Like every small business, we often rely on 3rd party service providers for professional services (legal, payroll, HR, etc.) …but small tech businesses also rely upon 3rd party providers for services rendered inside their apps (versus inside their offices) while those apps are being used by their clients; for example, payment processing and application analytics.

This distributed notion of computing introduces multiple layers of business entities at the very sensitive point where the application is being used in production – exponentially expanding the legal and compliance problems (each service provider must also have their own agreements within each country/jurisdiction).

This is now more than just unmanageably large and expensive – it’s potentially unsolvable. Small businesses deal with lots of unknowns (security vulnerabilities for example), but this new wrinkle will almost certainly have a chilling effect – either on how we serve EU markets AND/OR how we rely on 3rd party service providers (a core development pattern that, if abandoned, would make US dev firms less competitive).

Three: Like every small business, small tech companies cannot change direction with the stroke of a pen, the way laws and regulations can.

While the Safe Harbor framework was instantaneously nullified with one verdict, applications that were compliant moments before are now potentially in jeopardy – and they’re still running and still sending data – whether the app owner likes it or not.

Bottom line, this is a regulatory and governance issue and we need governments to work out……

Everyone loves small businesses, right? We need…

- To know what’s expected of us

- Agreement on what compliance looks like

- Visibility into enforcement and penalty parameters

Then, we can do what we know how to do – make smart technical and business development investments.

Other material

Here are three more links:

Two days ago, when the Safe Harbor ruling first came down, I posted an explanation of how (Link 1) PreEmptive Analytics can re-direct application usage data to support the kind of seismic shifts in architecture that might follow (Link 2) here.

That same evening, I was put in touch with Elizabeth Dwoskin, a WSJ reporter who was writing a piece on the impact that this sudden move would have on small businesses – my conversations with her are actually what prompted this post (WSJ has already posted her well-written article, (Link 3) Small Firms Worry, as Big-Data Pact Dies).

You might ask, if her article is so well-written (which it is), why would I have anything to add? She was looking for a “man-on-the-street” (dev-in-the-trenches) perspective on this one particular news item, BUT, the Safe Harbor ambush is just one example of the larger issues I hope I was able to outline here.

How will today's Safe Harbor ruling impact users of multi-tenant application analytics services?

Earlier today, the Safe Harbor system was overturned (see Europe-U.S. data transfer deal used by thousands of firms is ruled invalid).

The legal, operational, and risk implications are huge for companies that have, up until today, relied on this legal system (either directly or through third parties that relied on Safe Harbor) to meet the EU's privacy obligations.

What are the implications for application analytics solutions (homegrown or commercially offered)? It's not clear at this moment in time, but one thing is for sure - it is a lot harder to turn off an application, re-architect a multi-tier system, or force an upgrade than it is to simply sign a revised privacy agreement.

Multi-national companies that continue to transfer and process personal data from European citizens without implementing an alternative contractual solution or receiving authorization from a data protection authority are at risk of legal action, monetary fines, or a prohibition on data transfers from the EU to the US.

If this transfer of data is embedded inside an application/system's architecture - then a wholesale development/re-architecture plan may be required. Of course, re-architecting systems to keep data local within a country or region may simply be infeasible (for reasons of efficiency, cost, ...) UNLESS the system is, itself, already built to provide that kind of flexibility.

Happily, PreEmptive Analytics is.

- PreEmptive Analytics endpoints (in addition to on-prem of course) can live inside any Microsoft Azure VM. Clients with very specific requirements as to where their actual VMs are being hosted would always be able to meet those requirements with us. …and what about when a client gets even more specific (country borders for example) or when they want to support multiple jurisdictions with one app? (This leads to the second point…)

- PreEmptive Analytics instrumentation supports runtime/dynamic selection of target endpoints. While this would take a little bit of custom code on the developer’s part – our instrumentation would allow an application – at runtime – to determine where it should send its telemetry (perhaps a service that is called at startup that has a lookup table – if the app is running in Germany – send it to …, if it’s in China, send it to …, if it’s in the US…). This would allow an app developer with an international user base to support conflicting privacy and governance obligations with one application.
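To make the lookup-table idea a little more concrete, here is a minimal sketch of the kind of custom code a developer might write. It is not PreEmptive Analytics code and uses none of its API: the `ENDPOINTS` table, the `select_endpoint` function, and the `example.invalid` URLs are all hypothetical placeholders, and how the app learns its country code at startup is left to the developer.

```python
from typing import Mapping, Optional

# Hypothetical lookup table: country code -> telemetry endpoint.
# These URLs are placeholders for illustration only, not real services.
ENDPOINTS: Mapping[str, str] = {
    "DE": "https://analytics-de.example.invalid/ingest",
    "CN": "https://analytics-cn.example.invalid/ingest",
    "US": "https://analytics-us.example.invalid/ingest",
}


def select_endpoint(
    country_code: str,
    table: Mapping[str, str] = ENDPOINTS,
    default: Optional[str] = None,
) -> Optional[str]:
    """Return the telemetry endpoint for a country, or `default` if unlisted.

    Assumption: the caller has already worked out which country the app
    is running in (for example, by calling a service at startup).
    """
    key = country_code.strip().upper()
    return table.get(key, default)


# At startup the app would resolve its endpoint once and reuse it.
endpoint = select_endpoint("de")
```

In this sketch an unlisted country returns `None` by default, which the app could treat as "send no telemetry" until someone decides where that jurisdiction's data is allowed to go. That is a design choice for illustration, not a recommendation for every app.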

It may turn out that keeping German application analytics data in Germany is as important to US companies now as it is to German companies. One thing's for sure - the cadence and road map for application analytics cannot be tied to the cadence and road map of any one application - the two have to live side-by-side - but independently.
